One of the most striking differences between monkeys and other primates on the one hand and apes on the other is that—with a few exceptions—other primates have tails but apes don’t.

A new paper in Nature, which is really cool, investigates the genetic basis for the loss of tails in apes. (The phylogeny below shows that the primate ancestor had a tail, and it was lost in apes.)

Why did apes lose their tails? We don’t know for sure, but it may be connected with the fact that apes are mostly ground-dwellers, and a tail would be an impediment to living on the ground and moving via knuckle-walking or bipedal walking. (The gibbon, considered an ape that branched off early from the common ancestor with monkeys, is an exception, as it’s mostly arboreal. Gibbons move by swinging from branch to branch, but they have no tails. However, this form of locomotion, called brachiation, really doesn’t require a tail for grasping or balance.) I suspect that because apes who move in these ways don’t need tails, it is disadvantageous to have one: it’s metabolic energy wasted on an appendage that you don’t need, and one that could get injured. Thus natural selection likely favored the loss of a tail.

Regardless, the new Nature paper, which you can access below (pdf here, reference at bottom), involves a complex genetic analysis that pinpoints one gene, called Tbxt, as a key factor in tail loss. By genetically engineering the tail-loss form of the gene from apes and putting it into mice, they found that the mice engineered to have the ape form of the gene either had very short tails or no tails at all. But I’m getting ahead of myself.

Click to read:

First, here’s a phylogeny of the primates from the paper. Apes diverged from monkeys (or rather “other monkeys”, since apes can be considered a subgroup of monkeys) about 25 million years ago. The tailless apes are shown in blue, with the common ancestor of Old World monkeys and apes shown about 25 million years ago.

How can you find the genes that are involved in tail loss in apes? The best way to do it, which the authors used, is to first look for mutations in primates that cause loss or shortening of the tails, and then see whether the forms of those genes differ between apes and monkeys. Xia et al. looked at 31 such genes but didn’t find any genes whose forms were concordant with tail loss.

They then went on to mice, looking at another 109 genes associated with tail loss or reduction in the rodents. Here they found one gene, Tbxt, that had an unusual form in all apes that was lacking in other primates. Tbxt, by the way, encodes a transcription factor: a protein that itself controls the action of other genes, regulating how and whether they are transcribed, that is, how these other genes make messenger RNA from the DNA. (Messenger RNA, as you know, is then “translated” into proteins.)
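The screening logic described above can be sketched in a few lines of Python. This is only a toy illustration, not the authors' pipeline: the species list, the variant calls, and the gene name `GENE_B` are invented for the example. Only the idea comes from the description above: keep a candidate gene if its variant is present in every tailless species and absent from every tailed one.

```python
# Toy concordance screen. All data below are made-up placeholders,
# not the genotypes analysed by Xia et al.

# True = species has a tail, False = tailless (apes).
HAS_TAIL = {
    "human": False,
    "chimpanzee": False,
    "gibbon": False,
    "macaque": True,
    "marmoset": True,
}

# For each candidate gene: does the species carry the variant of interest?
# (Hypothetical calls, for illustration only.)
VARIANT_CALLS = {
    "TBXT": {"human": True, "chimpanzee": True, "gibbon": True,
             "macaque": False, "marmoset": False},
    "GENE_B": {"human": True, "chimpanzee": False, "gibbon": True,
               "macaque": True, "marmoset": False},
}


def is_concordant(calls, has_tail):
    """Variant present in every tailless species, absent in every tailed one."""
    return all(calls[sp] == (not has_tail[sp]) for sp in has_tail)


def concordant_genes(variant_calls, has_tail):
    """Return the sorted names of genes whose variant tracks taillessness."""
    return sorted(gene for gene, calls in variant_calls.items()
                  if is_concordant(calls, has_tail))


print(concordant_genes(VARIANT_CALLS, HAS_TAIL))  # ['TBXT']
```

A real screen would of course work from aligned genome sequences rather than a hand-typed table, but the filter at the end has this shape.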

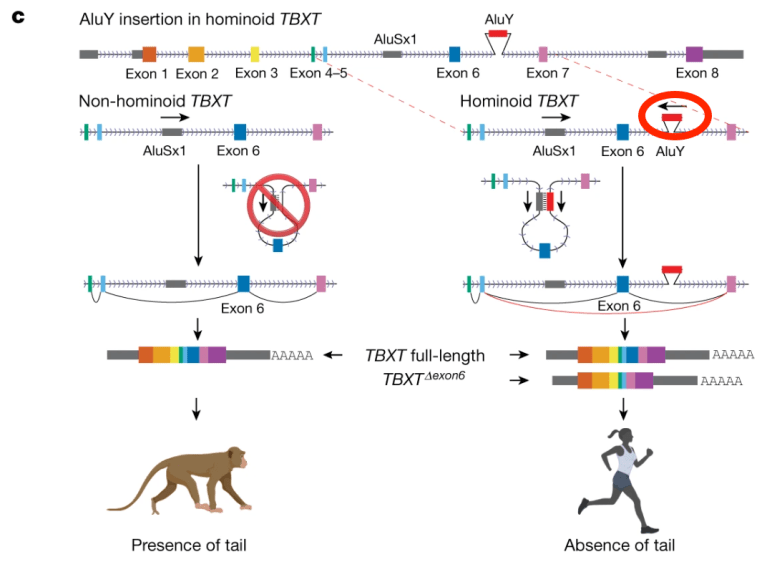

And this transcription factor had an unusual feature in apes but not in other primates: it contained a small sequence called Alu, about 300 base pairs long, that was inserted into the DNA of the Tbxt gene, but in a noncoding region (“intron”) separating the coding regions of Tbxt that make the transcription-factor protein. (Genes are often in coding segments, or exons, separated by introns, and the exons are spliced together into one string before the mRNA goes off to make protein.)

Only primates have Alu elements; they formed by a genetic “accident” about 55 million years ago and spread within genomes. We humans have about one million Alu elements in our genomes, and sometimes they move around, which gives them the name “jumping genes.” They are often involved in gene regulation, but can also cause mutations when they move, since they seem to move randomly.

Here’s a diagram of a monkey Tbxt gene on the left and the human version on the right. Note that in both groups the gene has coding regions, which are spliced together when mRNA is made to produce the full transcript. But note that in humans there is an Alu element, “AluY” stuck into the gene between Exon 6 and Exon 7. I’ve put a red circle around it. This inserted bit of DNA appears to be the key to the loss of tails. (Note the nearby Alu element AluSx1 in both groups.)

Here’s why the authors singled out the Tbxt gene as a likely candidate for tail loss. This is from the paper:

Examining non-coding hominoid-specific variants among the genes related to tail development, we recognized an Alu element in the sixth intron of the hominoid TBXT gene (Fig. 1b). This element had the following notable combination of features: (1) a hominoid-specific phylogenetic distribution; (2) presence in a gene known for its involvement in tail formation; and (3) proximity and orientation relative to a neighbouring Alu element. First, this particular hominoid-specific Alu element is from the AluY subfamily, a relatively ‘young’ but not human-specific subfamily shared among the genomes of hominoids and Old World monkeys. Moreover, the inferred insertion time—given the phylogenetic distribution (Fig. 1a)—coincides with the evolutionary period when early hominoids lost their tails. Second, TBXT encodes a highly conserved transcription factor crucial for mesoderm and definitive endoderm formation during embryonic development. Heterozygous mutations in the coding regions of TBXT orthologues in tailed animals such as mouse, Manx cat, dog and zebrafish lead to the absence or reduced forms of the tail, and homozygous mutants are typically non-viable.

In other words, it matches the distribution of tails or their absence, mutations in the gene affect tail lengths in mice, the insertion is about the same age as the common ancestor of apes and other primates (25 myr), its function at least suggests the potential to affect tail length, and, finally, mutations of the gene in other animals result in taillessness, including producing MANX CATS. Here’s a tailless Manx male.

But the real key to how this form of the gene causes tail loss rests in another speculation: there is another Alu element (“AluSx1” in both figures) which is inserted backwards in the same gene, lying between coding regions (exons) 5 and 6. The new AluY element is of a similar sequence to the old one, but in reverse orientation. So, when the Tbxt gene is transcribed and the transcript is being processed into mRNA, the two Alu elements pair up, which makes a loop of RNA between them that is simply spliced out of the mRNA sequence.

Here’s a diagram of that happening. Note the loop formed at top right by the pairing of the two Alu elements (red and dark gray), a loop that includes a functional part of the gene (exon 6 in royal blue). When the transcript of this gene is made, the code from exon 6 is simply cut out of the mRNA. This produces an incomplete protein product that could conceivably affect the development of the tail.
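For readers who like to see the logic spelled out, here is a minimal Python sketch of the proposed mechanism. It is a toy model under loud assumptions: the sequences are invented and far shorter than real Alu elements, the two copies are treated as perfect reverse complements (the real AluY and AluSx1 are only similar), and pairing is assumed to always cause skipping. The pairing is modelled on the RNA transcript, and only the three exons around the insertions are shown.

```python
# Toy model of the proposed exon-skipping mechanism. Sequences are
# invented placeholders, not the real AluSx1 or AluY sequences.

COMPLEMENT = str.maketrans("ACGU", "UGCA")


def reverse_complement(rna):
    """Return the reverse complement of an RNA string."""
    return rna.translate(COMPLEMENT)[::-1]


def can_pair(seq_a, seq_b):
    """Two stretches of one transcript can form a stem if one is the
    reverse complement of the other (perfect pairing assumed here)."""
    return seq_a == reverse_complement(seq_b)


def splice(exons, skipped=()):
    """Join exon labels in order, leaving out any that are skipped."""
    return "-".join(name for name in exons if name not in skipped)


# A made-up "Alu-like" stretch in intron 5, and the same element sitting
# in the opposite orientation in intron 6.
alu_intron5 = "GGCUCACGCC"
alu_intron6 = reverse_complement(alu_intron5)

# Only the exons flanking the two insertions are modelled.
exons = ["E5", "E6", "E7"]

# If the two elements pair, exon 6 sits in the loop and is left out.
skipped = ("E6",) if can_pair(alu_intron5, alu_intron6) else ()

print(splice(exons))           # E5-E6-E7 (full-length transcript)
print(splice(exons, skipped))  # E5-E7 (transcript lacking exon 6)
```

The point of the sketch is simply that two copies of a similar sequence in opposite orientations on the same strand are complementary to each other, which is what lets them pair and trap the exon between them.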

But does it work that way?

The authors did two tests to show that, in fact, removal of exon 6 in mice does shorten their tails, and in some cases can remove them completely.

The first experiment simply involved inserting a copy of Tbxt missing exon 6 into mice (they did this without the complicated loop-removal mechanism posited above). Sure enough, mice with one copy of this exon-missing gene showed various alterations of the tail, including no tails, short tails, and kinked tails.

This shows that creating the putative product of the ape loop-formation process, a Tbxt gene missing exon 6, can reduce the tail of mice.

But then the authors went further, because they wanted to know whether putting both the Alu elements AluSx1 and AluY into mice in the same positions they have in primates could produce reduced tails in mice via loop formation. They did this using a combination of CRISPR genetic engineering and crossing, because having two copies of the Tbxt gene that forms loops and excises exon 6 turns out to be lethal in mice. Viable mice have only one copy of the loop-forming gene.

And when they engineered mice having one copy of the normal Tbxt gene and one engineered copy with the two Alu elements whose pairing eliminated exon 6 (they showed this by sequencing), lo and behold, THEY GOT TAILLESS MICE! Here’s a photo of the various mice they produced. The two mice on the right have a single copy of the engineered gene with reversed Alu elements that produces a transcript missing exon 6. They are Manx mice! They have no tails! They are bereft of caudal appendages!

This complicated but clever combination of investigation and genetic engineering suggests pretty strongly that tail loss in apes involved the fixation of a mutant Tbxt gene that reduced tails via snipping out of an exon. This is not a certainty, of course, but the data are supportive in many ways.

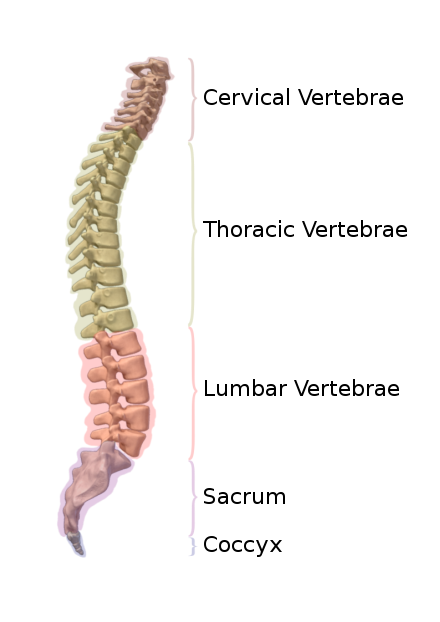

So this is likely one mutation that caused apes, over evolutionary time, to lose their tails. (We have only a small tail, the “coccyx”, consisting of 3-5 fused caudal vertebrae, as shown below in red in the second picture; both are from Wikipedia.)

Our tail, in red:

Now if this gene was indeed involved in the evolutionary loss of tails in apes, it would constitute a form of “macromutation”: a character change of large effect due to a single mutation. But surely more genes were involved as well. For one thing, even a single copy of this gene causes neural-tube defects, so any advantage of a smaller tail would have to outweigh the disadvantage of possibly producing a defective embryo or adult. Also, even if this gene is responsible for the missing or tiny tails of apes, there are likely other genes that evolved to further reduce the tail and to mitigate any neural-tube problems that would arise. (Evolution by selection is always a balance between advantageous and deleterious effects: it was advantageous for us to become bipedal, but that came with the side effects of bad backs and hernias.)

I really like this paper and have no substantial criticisms. The authors did everything they could to test their hypothesis, which stood up well under phylogenetic, temporal, and genetic analysis. We can’t of course be absolutely sure that the insertion of the AluY element helped the tailed ancestor of apes lose its tail, but I’d put my money on it.

What’s further appealing about this paper is that the genetic underpinning of the tail loss was completely unpredictable: the function of a gene was changed (and its phenotype as well) simply by the insertion of a “jumping gene” into a noncoding part of a functional gene. That formed a loop that caused a cut in the gene that, ultimately, affected tail formation. Apes with smaller tails presumably had a reproductive advantage over their bigger-tailed confrères, but the genetics of it is complex, weird, and wonderful.

h/t: Matthew

Reference: Xia, B., Zhang, W., Zhao, G. et al. On the genetic basis of tail-loss evolution in humans and apes. Nature 626, 1042–1048 (2024).